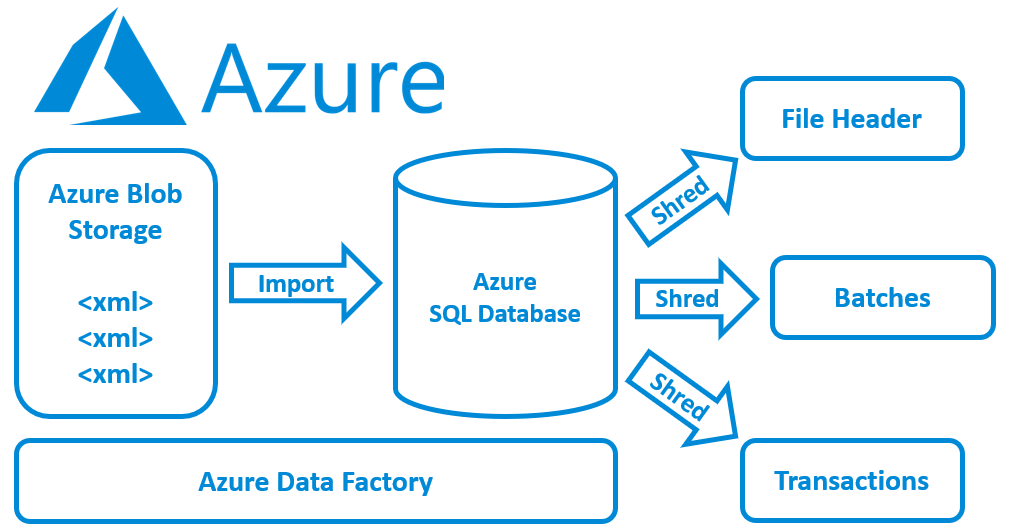

Within this blog, the goal is to load multiple XML files into a database in such a manner, that we can shred the XML structure across multiple tables easily. Added complexity is that the XML file names differ each delivery. This is quite a common case, for instance within bank payment flows. So how can this be arranged in Azure?

Non-Azure architecture

For reference purposes, let’s see how this is done in a non-Azure architecture. Because there it is very straightforward. For instance, XML files are delivered on a network location within your company network. A database like SQL Server is located within the same company network, and it requests the list of files from the network location. The only thing that SQL Server needs to do next, is loop through the list of files, and load the XML files into a table with data type XML. The next step is to shred XML contents into separate tables. This shredding will need explanation, but that’s not the purpose of this blog.

Azure architecture

This blog is about the case in an Azure environment. The XML files are stored in a folder on the Azure BLOB Storage and the target database is Azure SQL Database. Not SQL Server, but truly SQL Database of Azure. Scalability, faster release cycles, and cost reduction are some of the benefits mentioned for Azure.

I wrote this blog, because whilst importing multiple files in Azure can be done, quite some limitations of individual components are encountered for our specific case. For instance, a totally different concept is required to determine the list of XML files. I will use Azure Data Factory for this which is another Azure Service offered by Microsoft. And some very specific settings.

Importing a single XML into Azure SQL Database

Let’s start with the basics. Importing a single XML file from Azure BLOB Storage into Azure SQL Database. In Azure SQL Database we will have to create an External Data Source. An External Data Source can be created for multiple sources, like HADOOP, a different Azure SQL Database, or BLOB Storage. We will use this last type to import files from Azure BLOB Storage into Azure SQL Database.

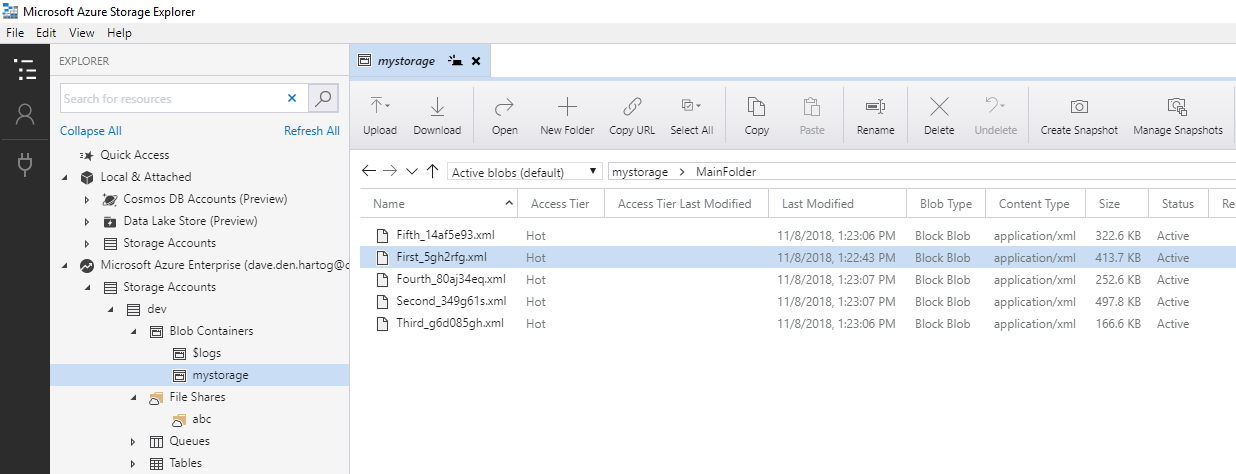

There are 5 files located in the Azure BLOB Storage, and first we will import the file “First_5gh2rfg.xml”. Do be able to do this, a destination table is created in Azure SQL Database. The contents of the XML file will be stored in a column with special data type XML. IMPORTANT => This data type is required to be able to shred the contents of an XML file across multiple tables in an easy way.

Then we can import data from Azure BLOB Storage into Azure SQL Database with a simple query:

INSERT INTO [BI].[XML_IMPORT] (XML_DATA)SELECT CAST(BulkColumn AS XML)FROM OPENROWSET(BULK 'MyStorage/MainFolder/First_5gh2rfg.xml',DATA_SOURCE = 'AzureBlobStorage',SINGLE_BLOB) AS XML_IMPORT

“MyStorage” is the BLOB Container, “MainFolder” the… well… main folder in the BLOB Container and “First_5gh2rfg.xml” one of the XML files in that folder. So, there you have it, importing a single XML file into Azure SQL Database from Azure BLOB Storage is quite easy to do.

The challenge

But what if we want to import multiple XML Files with changing names for each delivery? First, the following limitations are encountered:

- - Use of wildcard “*.xml” is not possible, because an existing file name needs to be provided

- - But the essential part is that Azure SQL Database cannot ask Azure BLOB Storage for a list of files!

Alternatively, you could use Azure Data Factory for the whole import mechanism. In Azure Data Factory you can define various sources and create Pipelines with one or more Activities. With the Copy Data Activity, you can copy data from various sources to various targets. This includes data copying from Azure BLOB Storage to Azure SQL Database. Below such a pipeline is shown.

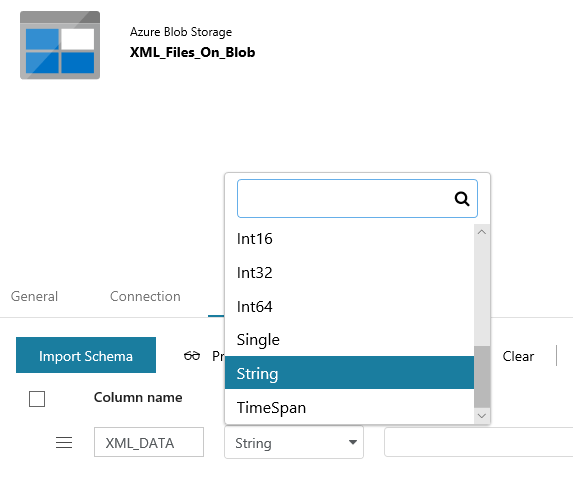

Well now, this looks to be exactly what we need! However, for multiple CSV files this would be a piece of cake… but unfortunately the same cannot be said for XML files which need to be imported into a database column with data type XML. Because the specific data type XML is (currently) not supported, which the following picture shows in the source definition of the Azure BLOB Storage.

If we would use data type String then each line in an XML file will be processed as a separate record, and the structure is lost. To shred the contents of the XML files across multiple tables will then be a challenge on its own. The data type XML also expects a true XML file and will raise an error during load.

The solution

The solution is a hybrid one. Because we can also use Azure Data Factory to get a list of XML file and store that list in Azure SQL Database. After this activity, Azure Data Factory can request Azure SQL Database to process the files based on that list. So here we are, multiple XML files can be imported in one go in Azure SQL Database!

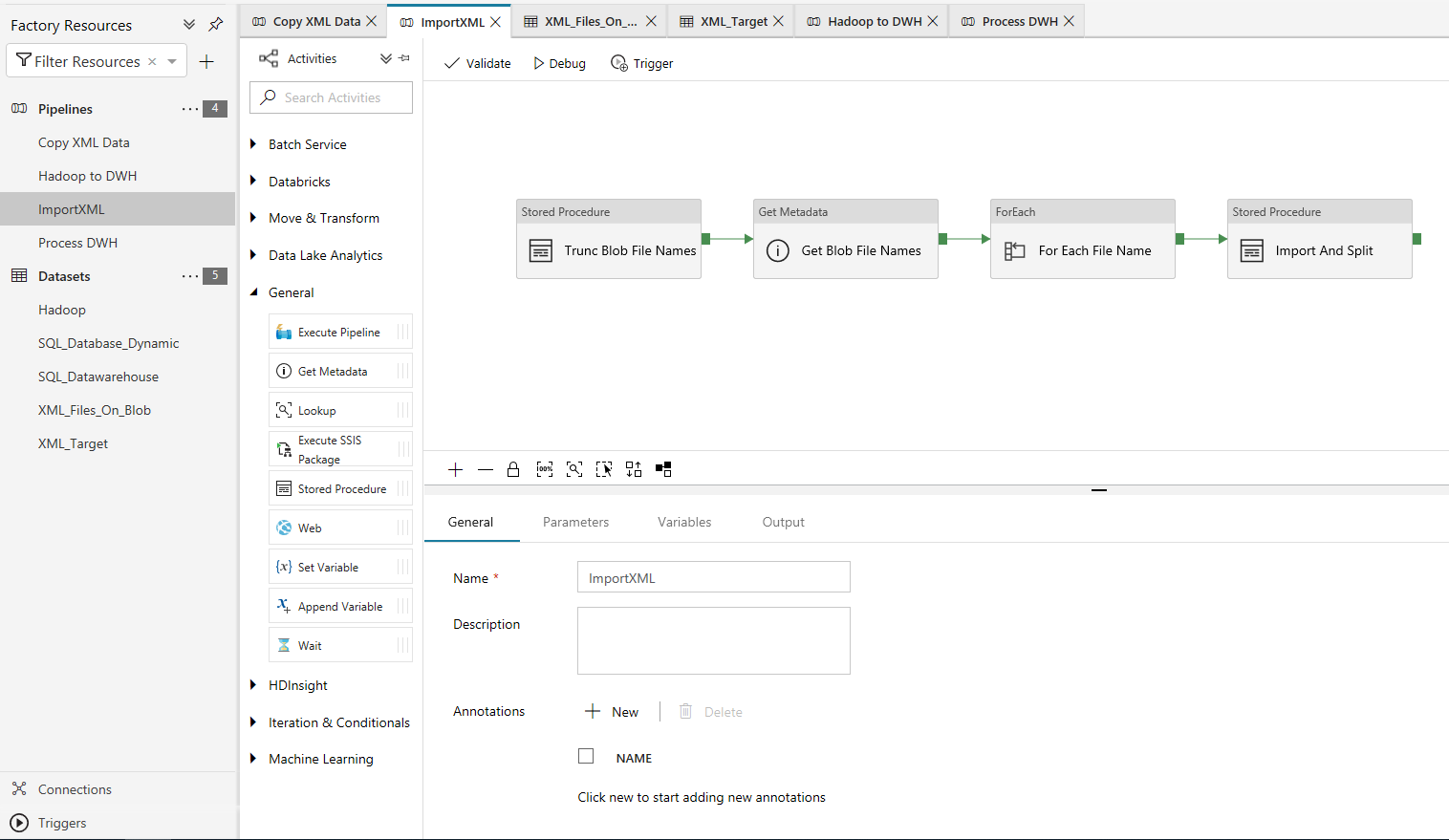

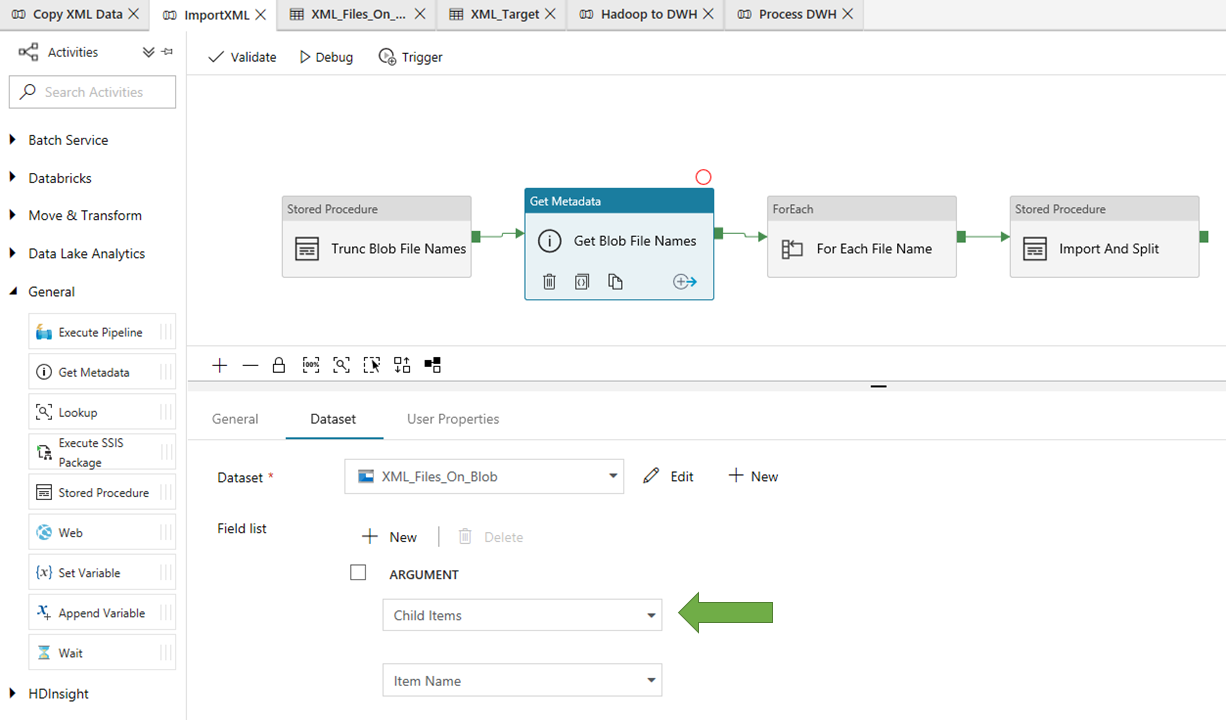

The pipeline looks like this:

Before creating this pipeline, a table is created in Azure SQL Database to store the file names, just like we would normally do in a non-Azure environment.

Activity 1 in the pipeline is truncating the table with file names by calling a Stored Procedure in Azure SQL Database.

Activity 2 and Activity 3 require more explanation, because this is the heart of the solution to retrieve the names of Azure BLOB Storage files and store these names in Azure SQL Database.

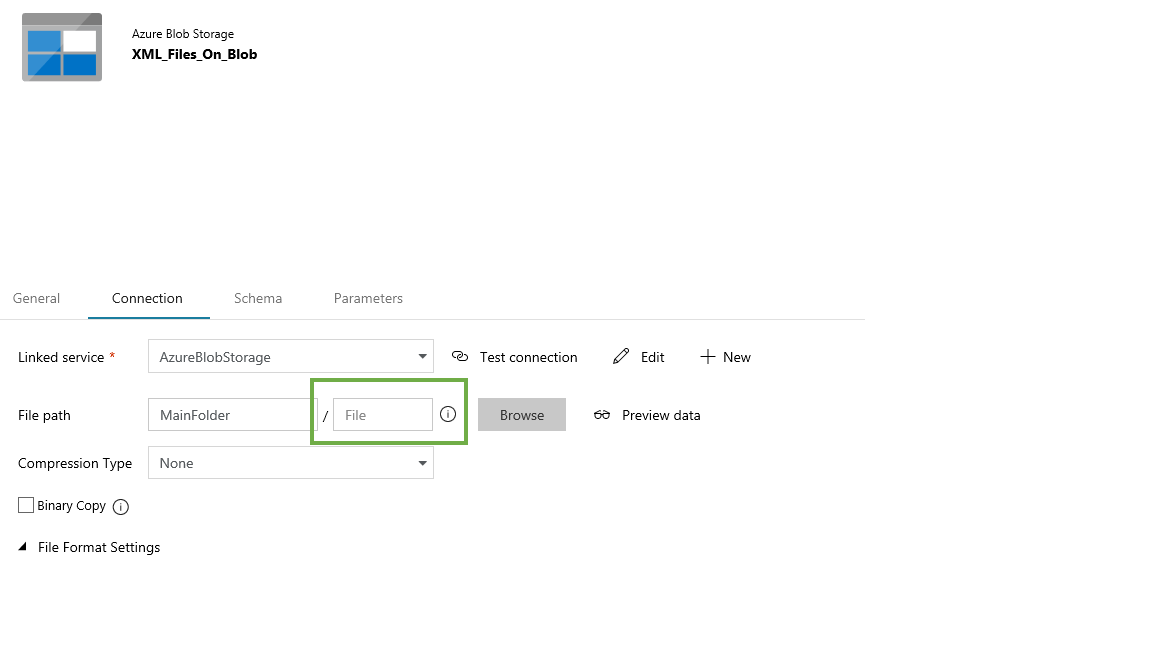

The second Activity is a “Get Metadata” Activity for which two things are crucial, being the dataset Arguments “Child Items” and “Item Name”. In our case these “Child Items” represent the names of the files in the Azure BLOB Storage folder “MainFolder”. That is because the dataset for the BLOB Storage in Azure Data Factory is configured as shown below. As you can see “File path” is filled, but “File” is left empty.

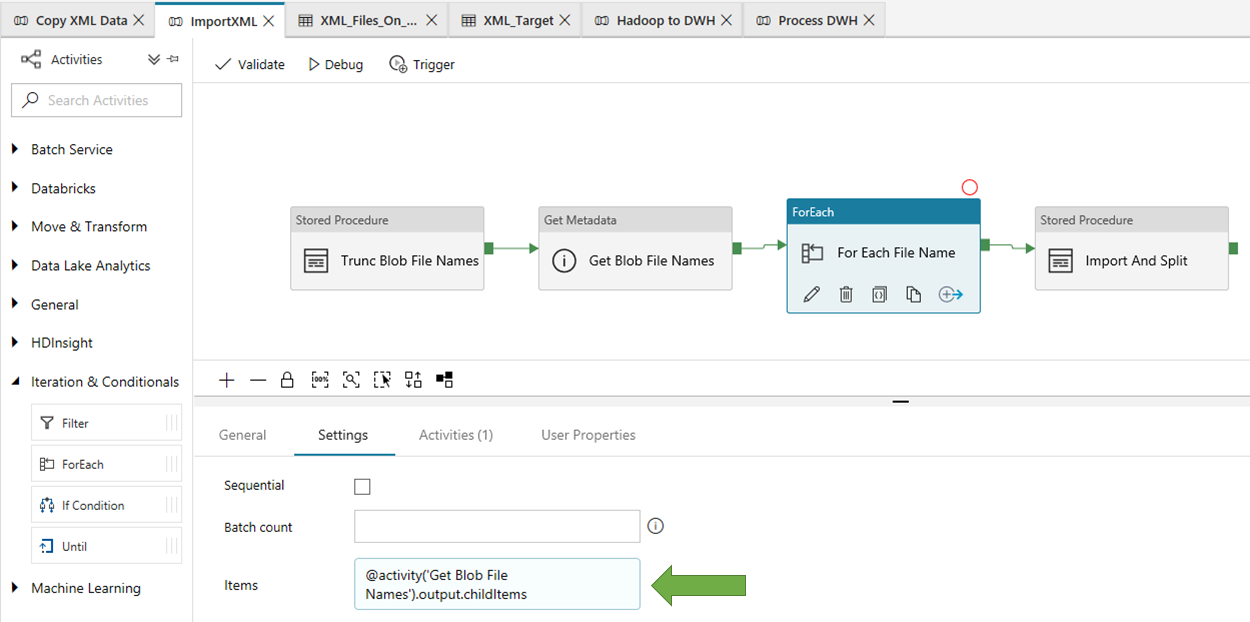

Now back to the pipeline to the third Activity of the type “ForEach”.

In this “ForEach” activity we need to specify “@activity('Get Blob File Names').output.childItems” in the Items setting. This will ensure that for each file in the Azure BLOB Storage folder a certain underlying action can be performed.

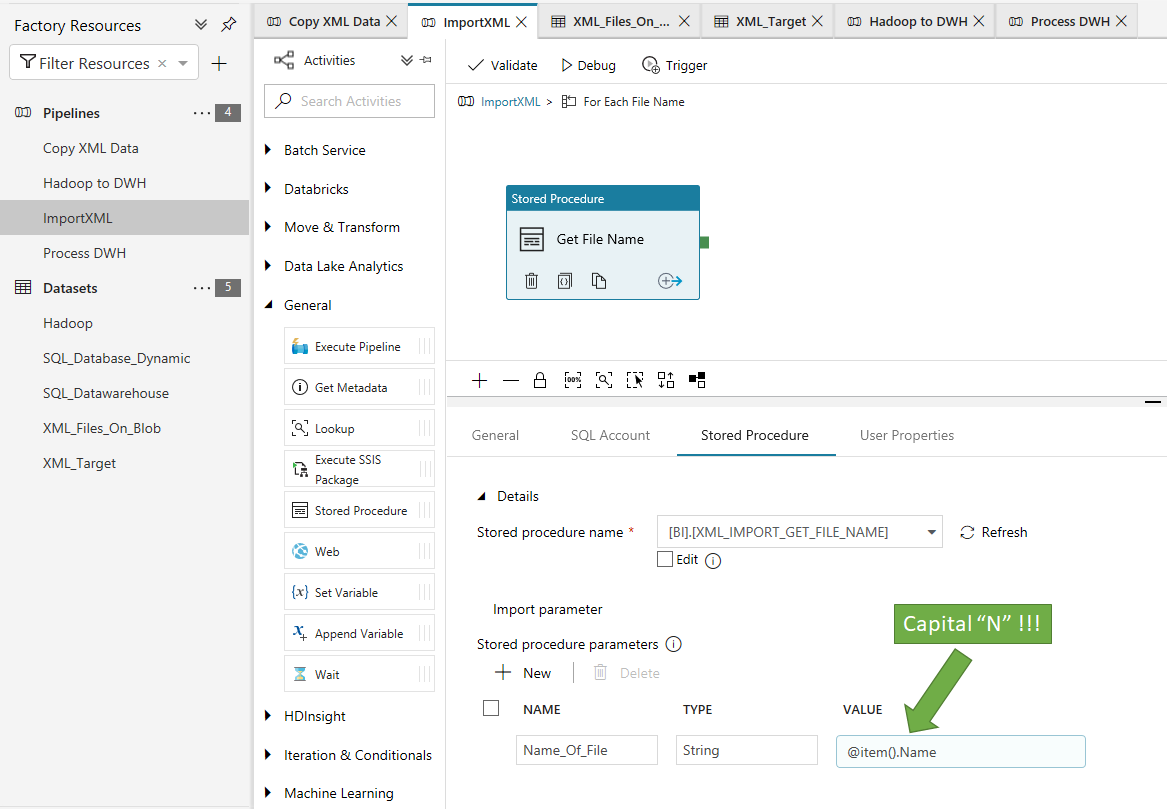

When double clicking on the “ForEach”, the sub activity is shown. In this case, I use a Stored Procedure activity to the Azure Database. This Stored Procedure is setup to do the following:

CREATE PROCEDURE [BI].[XML_IMPORT_GET_FILE_NAME] (@Name_Of_File varchar(max)) ASBEGININSERT INTO BI.BLOB_FILE_NAME SELECT @Name_Of_FileEND

In the @Name_Of_File parameter of this Stored Procedure the XML filename can be provided by Azure Data Factory which will come from the ForEach loop. In Azure Data Factory, you must do this properly, by entering the text “@item().Name” in the parameter of the Stored Procedure sub activity. IMPORTANT => This is case sensitive, so “@item().name” will not work, even though in the underlying code this property is specified as “name” without capital N!

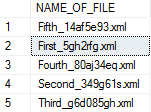

The intermediate result after pipeline Activities 1 to 3 is an Azure SQL Database table filled with all 5 file names from Azure BLOB Storage!

The fourth and final activity is the call of another Stored Procedure in Azure SQL Database. This Stored Procedure will loop through the list of file names and use them to import the XML files into a table with an XML column for further shredding. Just like you would do in a non-Azure environment.

The start of the Stored Procedure is shown below, where you can see that the simple query for importing a single XML file is reused and tweaked a bit. This query is assembled by using the file name coming from the table with file names. The looping is done by means of a cursor.

CREATE PROCEDURE [BI].[XML_IMPORT_AND_SPLIT] ASBEGINDECLARE @name_of_file varchar(8000)

DECLARE cursor_file_names CURSOR FAST_FORWARDFOR SELECT NAME_OF_FILE FROM BI.BLOB_FILE_NAME WHERE NAME_OF_FILE LIKE '%.xml'

OPEN cursor_file_namesFETCH NEXT FROM cursor_file_names into @name_of_file

WHILE @@FETCH_STATUS = 0BEGINEXEC('INSERT INTO [BI].[XML_IMPORT](XML_DATA)SELECT CAST(BulkColumn AS XML)FROM OPENROWSET(BULK ''MyStorage/MainFolder/' + @name_of_file + ''',DATA_SOURCE = ''EDS_AzureBlobStorage'',SINGLE_BLOB) as XML_IMPORT')FETCH NEXT FROM cursor_file_names into @name_of_fileENDDEALLOCATE cursor_file_names

After this section, the procedure also contains the shredding of XML contents across multiple tables, but that’s another topic.

Conclusion

Importing multiple files from Azure BLOB Storage into Azure SQL Database truly is a piece of cake. However, to load multiple XML files into an XML type column, we encounter several limits of individual Azure components. Nevertheless, this can be done properly in Azure as well by making use of an alternative concept and correct settings in Azure Data Factory.